Ever lost three hours debugging a model pipeline, only to discover your dev stack had a silent bottleneck? You’re not alone. Over 70% of developers report wasted time due to poor automation support or hidden inefficiencies in their tech stack. Most guides repeat the same old names. You’re not here for that.

You want to move fast. Build smarter. Push clean releases without burnout.

The Best Rare AI App Development Tools

Some of the fastest releases in AI don’t happen in giant labs. They come from lean teams that use sharp tools others ignore. I’ve spent years optimizing product cycles, cutting build time, and stripping bloat. Below is the real list—fast tools that punch above their weight.

1. Turbocharge Your LLM Workflows with Grok 3 API

Forget clunky wrappers. Grok 3 API runs lean and clean. Built for developers who demand precise control, Grok 3 helps scale LLM pipelines without dragging infrastructure behind. You get:

- High-speed transformer execution

- Lightweight architecture

- Real-time performance metrics

Perfect for devs who want total model governance without friction. Fewer calls. Less load. Faster iterations.

Pro tip: Combine Grok 3 with lightweight edge deployment for fast response loops in production.

2. CodeSquire: AI That Writes Like a Senior Dev

Tired of junior-level suggestions? CodeSquire writes high-level Python scripts and SQL queries that actually scale. It uses context in smarter ways than Codex or Bard ever managed.

Why it wins:

- Snaps into Jupyter, Colab, VS Code

- Knows dev shortcuts and import hierarchies

- Respects existing code patterns

Use it to prototype pipelines or auto-generate ETL logic. Saves hours when managing AI workflows with multi-source data.

3. Roboflow Universe: Beyond Image Labeling

You’ve seen datasets. But Roboflow Universe holds rare gems. The platform isn’t just for annotation anymore. It curates hard-to-find datasets built for edge cases in manufacturing, security, and agriculture.

Use cases:

- Build image classifiers for obscure object sets

- Speed up detection modeling without sourcing your own data

- Version, test, and deploy models in one pipeline

If you’re building custom vision systems fast, stop wasting time collecting junk data.

4. Superblocks: Drag, Drop, Ship AI Ops

Most AI backends don’t need to look fancy. Superblocks proves that by letting you wrap any AI model into a functioning UI or internal tool without a frontend team.

Why it deserves more love:

- Connects to Python backends instantly

- Handles auth, roles, and inputs without noise

- Launches in minutes

Spin up feedback dashboards, annotation tools, or test interfaces in hours. Let PMs test without pinging devs.

5. Ploomber: Pipeline Orchestration Without the Bloat

You need orchestration. You don’t need Airflow headaches. Ploomber is the lightweight, notebook-native pipeline manager that lets you:

- Track execution across steps

- Push parameter changes without rewriting

- Integrate CI/CD without glue code

Ideal for solo developers or fast-paced AI startups.

6. Valohai: CI/CD for ML That Doesn’t Break

Most CI/CD pipelines ignore model drift. Valohai builds clean, traceable deployment pipelines focused on AI workflows. The UI doesn’t pretend to know more than the devs.

Benefits:

- Total version control across experiments

- Built-in metrics, logs, and infra tracking

- No vendor lock-in

Save days on model release planning. Catch regressions before production hits.

7. Baseten: Serve Models Like a Pro

You trained it. Now what? Baseten serves ML models without ops drama. Deploy with zero setup. Monitor usage live. Optimize inference behind the scenes.

What makes it stand out:

- Inference graphs built in

- GPU scaling that actually works

- Clear pricing and control

Baseten fits when you outgrow Flask hacks but don’t need a full-scale ops team.

8. Galileo: Fix ML Errors Without Guesswork

Your model failed? Galileo tells you why. It surfaces noisy labels, bad slices, and input outliers that cripple performance.

Key features:

- Real-time error heatmaps

- Automatic data drift checks

- Bias and fairness analysis

Helps cut weeks of guess-debug cycles.
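
To make "data drift check" concrete, here is a minimal, tool-agnostic sketch. It is not Galileo's API. It uses the Population Stability Index (PSI), a common way to compare one numeric feature between a baseline sample and a current sample. The function name, the bin count, and the `eps` floor are all assumptions chosen for illustration.

```python
import math
from typing import Sequence


def population_stability_index(
    baseline: Sequence[float],
    current: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """Compare two samples of one numeric feature; larger values mean more drift."""
    if not baseline or not current:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 for a constant feature

    def bucket_shares(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Clamp so values outside the baseline range land in the edge buckets.
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # Floor each share at eps so the logarithm below is always defined.
        return [max(c / len(values), eps) for c in counts]

    expected = bucket_shares(baseline)
    actual = bucket_shares(current)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))
```

A commonly cited rule of thumb treats a PSI above roughly 0.25 as a shift worth investigating, but the right threshold depends on your data. Run it per feature on a schedule and alert when the score climbs.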

9. Tonic.ai: Smart Synthetic Data Generation

Don’t trust your test data? Tonic.ai crafts synthetic datasets that mirror real production patterns. Great for:

- Privacy-sensitive devs

- Simulating rare edge cases

- Fast testing without risk

No need to strip PII or wait for real users.

10. Continual: AI That Lives Inside Your Data Warehouse

Forget exporting. Continual lets you train, update, and score models right inside Snowflake, BigQuery, or Redshift.

Why that matters:

- No data duplication

- No external pipelines

- Full compliance alignment

Great for teams with strong warehouse culture and limited MLOps bandwidth.

Hidden Time Killers That Slow AI Delivery

Speed never depends on typing speed. Delays hide in places you overlook.

You waste time with bloated CI/CD systems built for web apps. Your model needs triggers, metrics, and rollback logic. Not just push-to-deploy. Manual metric checks cost days. Visual diff tools solve that in seconds. Writing batch jobs by hand? That’s dead weight.
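
Here is a rough sketch of what "metrics and rollback logic" can look like next to plain push-to-deploy. Everything in it is hypothetical: `ModelRegistry` is an in-memory stand-in for wherever you track live model versions, `gated_release` and `max_drop` are illustrative names, and the check assumes higher metric values are better.

```python
from dataclasses import dataclass, field


@dataclass
class ModelRegistry:
    """Hypothetical in-memory stand-in for a real model registry."""
    live: str
    history: list[str] = field(default_factory=list)

    def promote(self, version: str) -> None:
        # Remember the previous version so a rollback has somewhere to go.
        self.history.append(self.live)
        self.live = version

    def rollback(self) -> None:
        if self.history:
            self.live = self.history.pop()


def gated_release(
    registry: ModelRegistry,
    candidate: str,
    candidate_metrics: dict[str, float],
    live_metrics: dict[str, float],
    max_drop: float = 0.01,
) -> bool:
    """Promote the candidate, then roll back if any tracked metric regressed."""
    registry.promote(candidate)
    for name, live_value in live_metrics.items():
        # Treat a missing metric as a failure instead of silently passing.
        new_value = candidate_metrics.get(name, float("-inf"))
        if new_value < live_value - max_drop:
            registry.rollback()
            return False
    return True


registry = ModelRegistry(live="v1")
ok = gated_release(registry, "v2", {"accuracy": 0.90}, {"accuracy": 0.93})
# ok is False and registry.live is back to "v1"
```

The point of the design is that the comparison and the rollback live in one function, so nobody has to eyeball a dashboard before deciding whether a release stays up.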

Remove what slows you down before you add team members. Speed doesn’t come from typing faster. It comes from knowing where delays creep in.

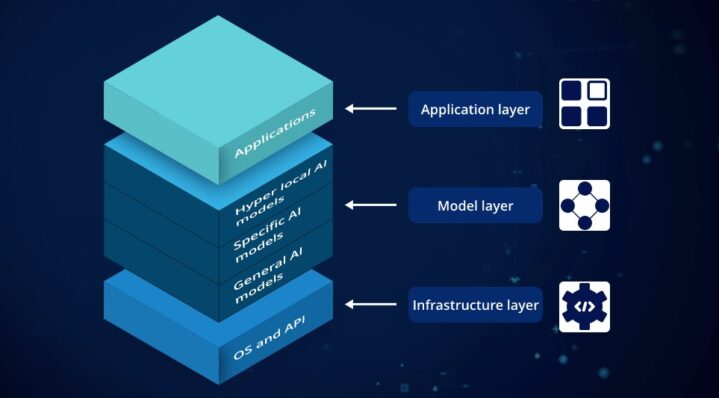

Why Most AI Stacks Fail Before Production

Great prototypes mean nothing if your system breaks on real data. Most AI builds collapse before launch because the stack was never built to scale. Pipelines work once, then fail silently under load. Metrics vanish when audit logs matter most. The issue isn’t your model—it’s your stack discipline.

Developers often patch gaps with quick fixes. But patches don’t survive production. You need versioning at every layer. Monitoring that tracks real signals. Testing that mirrors real-world failure. Tools like Valohai, Galileo, and Tonic.ai exist to handle that weight without dragging performance.
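
One lightweight way to approach "versioning at every layer" is to derive a single ID from the code version, the exact data, and the parameters of a run. The sketch below uses only the Python standard library and is not how any of the tools above do it. The function name, the 12-character truncation, and the input shapes are assumptions for illustration.

```python
import hashlib
import json


def run_fingerprint(code_version: str, data_bytes: bytes, params: dict) -> str:
    """Derive one short ID from the code version, the exact data, and the parameters."""
    payload = {
        "code": code_version,
        "data": hashlib.sha256(data_bytes).hexdigest(),
        # sort_keys makes the ID independent of dict insertion order.
        "params": json.dumps(params, sort_keys=True),
    }
    blob = json.dumps(payload, sort_keys=True).encode("utf-8")
    return hashlib.sha256(blob).hexdigest()[:12]
```

Log this ID next to every metric and artifact. If two runs share an ID, they used the same code, data, and parameters, and if they differ, at least one of those inputs changed.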

Stop betting on brute-force coding. Start building systems that don’t collapse under pressure. You ship faster when your foundation holds.

Final Take: Build Smarter, Not Harder

Your workflow should accelerate you. Not trap you in feedback loops, bottlenecks, or bloated stacks. Most developers don’t fail because of weak code—they fail because of weak tool choice. Fast delivery dies inside cluttered environments and outdated habits.

Strip every piece of overhead that doesn’t serve output. That includes legacy CI/CD, slow labeling workflows, and inefficient deployment steps. The smartest teams don’t out-code the rest. They out-decide them.

Every tool in this list has a purpose. They remove friction at scale. They speed up review, testing, monitoring, or delivery. They don’t add steps. They remove them.

Speed doesn’t come from typing more. Speed comes from removing blockers. Most teams chase output with brute force. That approach burns time. What works is smarter choices, earlier. Be smart and choose sharp tools.